
“Misinformation” and “disinformation” derived from artificial intelligence (AI) — not conflict or poverty — are the top risks facing the world in the next two years, according to the latest World Economic Forum (WEF) Global Risks Report.

The WEF released the report on Jan. 10, just before its annual meeting in Davos, Switzerland.

Political and business leaders echoed these concerns throughout the event. More than 60 heads of state and 1,600 business leaders are among this year’s 2,800 participants from 120 countries — attendees referred to by WEF founder and chairman Klaus Schwab as the “trustees of the future.”

According to the Global Risks Report, “Foreign and domestic actors alike will leverage misinformation and disinformation to widen societal and political divides” in the next two years, posing a risk to elections in countries such as the U.S., U.K. and India.

“Misinformation” is listed in the report as the fifth greatest threat over the next 10 years.

“AI can build out models for influencing large populations of voters in a way that we haven’t seen before,” said Carolina Klint in an interview with CNBC. Klint is the chief commercial officer for Europe at the Marsh McLennan consulting firm, which co-produced the report.

Notably, “Artificial Intelligence as a Driving Force for the Economy and Society” is one of the “4 key themes” of this year’s WEF meeting. The central theme is “Rebuilding Trust.”

“The report suggests that the spread of mis- and disinformation around the globe could result in civil unrest, but could also drive government-driven censorship, domestic propaganda and controls on the free flow of information,” the WEF said.

The other top 10 risks for the next two years include “extreme weather events,” “societal polarization,” “cyber insecurity,” “interstate armed conflict,” “lack of economic opportunity,” “inflation,” “involuntary migration,” “economic downturn” and “pollution.”

WEF: ‘Misinformation,’ ‘disinformation’ may lead to ‘erratic decision-making’

A WEF Agenda article published in Time magazine and authored by Saadia Zahidi, the WEF’s managing director, summarized this year’s Global Risks Report, noting that it is “based on the views of nearly 1,500 global risks experts, policy-makers, and industry leaders.”

The world faces “some of the most challenging economic and geopolitical conditions in decades. And things may only deteriorate from here,” Zahidi wrote.

She explained why “false information” poses the biggest threat over the next two years, writing:

“The threat posed by mis- and disinformation takes the top spot in part because of just how much open access to increasingly sophisticated technologies may proliferate, disrupting trust in information and institutions.

“The boom in synthetic content that we’ve seen in 2023 will continue, and a wide set of actors will likely capitalize on this trend, with the potential to amplify societal divisions, incite ideological violence, and enable political repression.”

According to Zahidi, with some of the world’s largest countries holding elections this year, mis- and disinformation “could risk destabilizing the real and perceived legitimacy of newly elected governments,” while “manipulative campaigns” could “undermine democratic processes overall.”

“What’s more, false information and societal polarization are inherently intertwined, with potential to amplify each other,” she wrote.

“Polarized societies may become polarized not only in their political affiliations, but also in their perceptions of reality,” Zahidi added, claiming that it could lead to “continued strife, uncertainty, and erratic decision-making.”

For Zahidi, the solution to “both shorter- and longer-term risks” requires “innovation and trustworthy decision-making,” which she said “is only possible in a world with alignment on the facts.”

Zahidi made similar claims during a Jan. 11 interview with India’s CNBC-TV18.

WEF’s Managing Director Saadia Zahidi says mis- and disinformation are the #1 risk in the world today.

I agree completely. Yet who spews the most disinformation, lies and gaslighting propaganda – while engaging in massive censorship and suppression of truth?

– WEF

-… pic.twitter.com/CQGypo4DLU

— J Kerner (@JKernerOT) January 18, 2024

Zahidi said that “misinformation” and “disinformation” are “very difficult to track, especially without tracking systems, watermarking systems, and especially without the public being well-educated about the risks of synthetic content and especially when that is fake news.”

“We need some solutions to be built between coalitions of the willing, and that’s what we’ll be working towards in Davos,” Zahidi said.

Musk: ‘Misinformation’ is ‘anything that conflicts’ with WEF agenda

The WEF’s claims elicited some heated responses, including from Elon Musk, chairman and chief technology officer of X (formerly known as Twitter), who, in a Jan. 11 post responding to the WEF’s Global Risks Report, wrote:

By “misinformation”, WEF means anything that conflicts with its agenda https://t.co/jiI7WsYnNb

— Elon Musk (@elonmusk) January 11, 2024

In a post on Substack, Steve Kirsch, founder of the Vaccine Safety Research Foundation, wrote that “world governments can stop misinformation” if they “stop lying to people,” “stop the censorship,” admit mistakes, and allow “all professionals, in all fields … to speak freely to the public without fear of retribution.”

But such views do not appear to be shared by the vast majority of WEF meeting participants.

Speaking to Euronews Tuesday, Věra Jourová, the European Commission’s vice president for values and transparency, threatened Musk with sanctions if X does not comply with the European Union’s newly introduced “Digital Services Act,” which regulates so-called “misinformation” and “disinformation” on social media platforms.

“After Mr. Musk took over Twitter with his freedom of speech absolutism, we are the protectors of freedom of speech as well,” Jourová said. “But at the same time, we cannot accept, for instance, the illegal content online and so on.”

Speaking at the WEF 2023 summit, unelected EU technocrat Věra Jourová, threatens Elon Musk with sanctions if the EU’s totalitarian censorship rules are not “complied with”.

“Our message was clear: We have the rules, which have to be complied with, and otherwise there will be… pic.twitter.com/XMJLVa3ll1

— Wide Awake Media (@wideawake_media) January 16, 2024

Ursula von der Leyen, president of the European Commission, told WEF attendees Tuesday that “disinformation and misinformation” are serious risks “because they limit our ability to tackle the big global challenges we are facing. And this makes the theme of this year’s Davos meeting even more relevant: Rebuilding trust.”

According to von der Leyen, who is not popularly elected, “Of course, like in all democracies, our freedom comes with risks. There will always be those who try to exploit our openness, both from inside and out. There will always be attempts to put us off track. For example, with disinformation and misinformation.”

Special Address by @vonderleyen, President of the @EU_Commission #wef24 https://t.co/Y18d81ZdHC

— World Economic Forum (@wef) January 16, 2024

For von der Leyen, “immediate and structural responses” are required “to match the size of the global challenges,” involving collaborations between governments and businesses. This is a reference to public-private partnerships, a concept promoted by the WEF.

“Businesses have the innovation, the technology, the talents to deliver the solutions we need to fight threats like climate change or industrial-scale disinformation,” she said.

She also referred to the Digital Services Act, claiming it has “defined the responsibility of large internet platforms on the content they promote and propagate. A responsibility to children and vulnerable groups targeted by hate speech, but also a responsibility to our societies as a whole.”

According to von der Leyen, because the boundary between online and offline is “getting thinner and thinner … the values we cherish offline should also be protected online.”

Governments should ‘force’ social media companies ‘to do better’

Julie Inman Grant, a former Twitter employee who is now Australia’s “eSafety” commissioner, also attacked Musk and called for more social media regulation.

Speaking at a session Wednesday — “Protecting the Vulnerable Online” — Grant said agencies like hers “serve as that safety net” where the vulnerable can “report to us.”

Such reporting will trigger an investigation, Grant said, and “collaboration with platforms” will “ensure swift actions.”

She added:

“We’re also very focused on transparency, and we’ve used our transparency powers to really find out what’s happening under the hood.

“We’ve just issued [sanctions] against X Corp. around online hate, where we were able to really get a sense of the extent to which they cut their safety engineers by 80%, their content moderators by 30%, their public policy people by 70% and then they enabled previously suspended users.”

WATCH: Australia’s eSafety Commissioner Julie Inman Grant just said at the World Economic Forum that @elonmusk’s firing of online hate speech censors at @X was like blocking ambulances and allowing dangerous drivers onto the road. pic.twitter.com/g6syKkuGI1

— Cosmin Dzsurdzsa 🇷🇴 (@cosminDZS) January 17, 2024

Praising attempts by various countries to regulate social media platforms, Grant suggested that governments should “force” social media companies to police speech on their platforms.

“We as governments have to work together to counter the wealth, the stealth, and frankly, the power of all these technology companies, and we need to work together to really force them to do better,” she said.

At the 2022 WEF meeting in Davos, Grant said that a “recalibration” of freedom of speech is needed.

Australian eSafety commissioner Julie Inman Grant tells the World Economic Forum we need a “recalibration” of freedom of speech. pic.twitter.com/zEq72wFhNf

— Andrew Lawton (@AndrewLawton) May 23, 2022

At this year’s WEF meeting, she criticized an injunction issued in the U.S. as part of the Missouri et al. v. Biden et al. lawsuit, preventing White House and government officials from speaking with social media companies about censoring content on their platforms.

She said:

“There is currently an injunction in place stopping the Biden administration from communicating with social media platforms about interference threats on the topics of elections that’s actually going before the United States Supreme Court this year.

“So we’re in this bizarre environment where right as the threats are ticking up, the investments in actually doing the day-to-day work of online trust and safety for our information environment is being scaled back and is under attack.”

The Australian government is currently considering enacting new powers to “combat misinformation and disinformation,” widely referred to as a “misinformation bill.”

On the same panel, Maurice Lévy, chairman of the supervisory board for Publicis Groupe, one of the world’s largest marketing and public relations firms, credited collaborations born out of previous years’ WEF meetings for increased moderation and removal of “misinformation” and “disinformation” on social media platforms.

He said:

“Some eight, nine years ago, we decided — the five top global agencies with some 30 top advertisers — in a meeting here in Davos [to] ask Facebook … YouTube, Google to participate, and there have been some rules which have been extremely strongly made by the advertisers and the agencies. We don’t want our ads to be next to violence, terror, etc.

“We implemented such strong rules … that YouTube has been forced to create moderation, which they had not before, and to clean up the content.”

The Chairman of Publicis Groupe gloats about how advertizers can force social media companies to moderate content they don’t like. pic.twitter.com/Fmzrw6mDrN

— True North (@TrueNorthCentre) January 17, 2024

Publicis Groupe’s clients include Big Pharma companies such as GlaxoSmithKline, Pfizer Consumer Healthcare, Merck, AstraZeneca, Johnson & Johnson and Purdue Pharmaceuticals, maker of OxyContin, and Big Tech companies such as Google, Amazon and Microsoft.

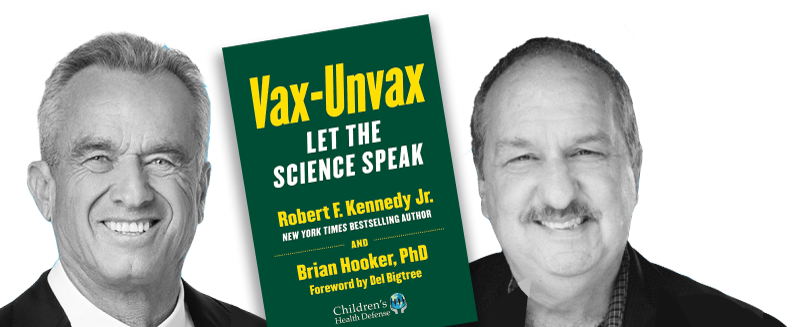

Publicis Groupe also collaborates with “fact-checking” firm NewsGuard and with the Center for Countering Digital Hate, authors of the “Disinformation Dozen” list which was used to pressure social media platforms to censor true content posted by figures such as Robert F. Kennedy Jr., chairman on leave of Children’s Health Defense, during the COVID-19 pandemic.

‘You get them to places like Davos’

Alexandra Reeve Givens, president and CEO of the Center for Democracy & Technology (CDT), suggested during a session Wednesday that executives of social media companies be brought to Davos to discuss “interventions” combating “misinformation” and “disinformation.”

The CEO for the Center for Democracy and Technology is asked on a World Economic Forum panel how to force social media companies to ramp up online trust and safety initiatives.

Answer: “You get them to places like Davos.” pic.twitter.com/BgT1izWVTf

— Andrew Lawton (@AndrewLawton) January 16, 2024

Responding to a question from Ravi Agrawal, editor-in-chief of Foreign Policy, about whether there is a way to “force” or “push” social media companies to police “misinformation” and “disinformation,” Givens said, “You get them to places like Davos.”

She added:

“There are important lessons that we learned after 2016, [that] social media companies learned about how you track mis- and disinformation campaigns, what coordinated inauthentic activity looks like on a network, how you put brakes in, how when a rumor is flying you get people to check whether or not have you read this article before you forward it to fact-checking programs.

“That architecture, it hasn’t been a silver bullet by a long shot, but at least that architecture has been in place and there’s an entire academic field now that studies this and analyzes what interventions might look like. We have to make sure that those interventions are still in place this year as a bare minimum for us to be able to navigate this landscape.”

Julie Brill, Microsoft’s corporate vice president and deputy general counsel, is a member of CDT’s board of directors. CDT’s advisory council includes figures from Meta (the parent company of Facebook), Amazon and the Stanford Cyber Policy Center, which hosted The Virality Project. According to the “Twitter Files,” the project played a key role in social media censorship during the COVID-19 pandemic.

At a WEF panel discussion Monday titled, “Open Forum: Liberating Science,” Carlos Afonso Nobre, Ph.D., senior researcher at the University of São Paulo’s Institute of Advanced Studies, said it is difficult for him, as a climate scientist, “to understand misinformation, disinformation.”

Australia’s controversial ‘eSafety Commissioner’ pushes for stricter online regulations at WEF https://t.co/ay9377i72p

— Yanky 🇺🇲 (@Yanky_Pollak) January 18, 2024

Speaking about his home country of Brazil, Nobre said, “A lot of people did not take vaccination,” referring to the COVID-19 vaccines. He blamed this on an “erosion of trust” in science, which he said was fueled by “misinformation” and “disinformation.”

Nobre said it’s important to understand how not to communicate science topics and to understand “why some people still do not believe” in such narratives.

Outside the confines of the WEF meeting, attendees were less forthcoming with their views.

Rebel News journalist Avi Yemini confronted Meta executives Alex Schultz, the company’s chief marketing officer and vice president of analytics, and Javier Olivan, Meta’s chief operating officer, on the streets of Davos with questions about “fact-checking,” “transparency” and the company’s efforts to target “misinformation” and “disinformation.”

Meta executives EVADE questions on censorship and political interference at Davos

Social media elites peppered with questions over growing censorship concerns.

High-ranking Meta executives ducked questions from Rebel News on the streets of Davos as they retreated to a secure… pic.twitter.com/iJzDDOH4vm

— Rebel News (@RebelNewsOnline) January 18, 2024

The two executives evaded the questions, instead telling Yemini that they are “not engaging” with him.